Best Practices for Agent-to-Agent Authentication Copy

Oct 9, 2025

Matt (Co-Founder and CEO)

Understanding Non-Human Identity Risks in AI Systems

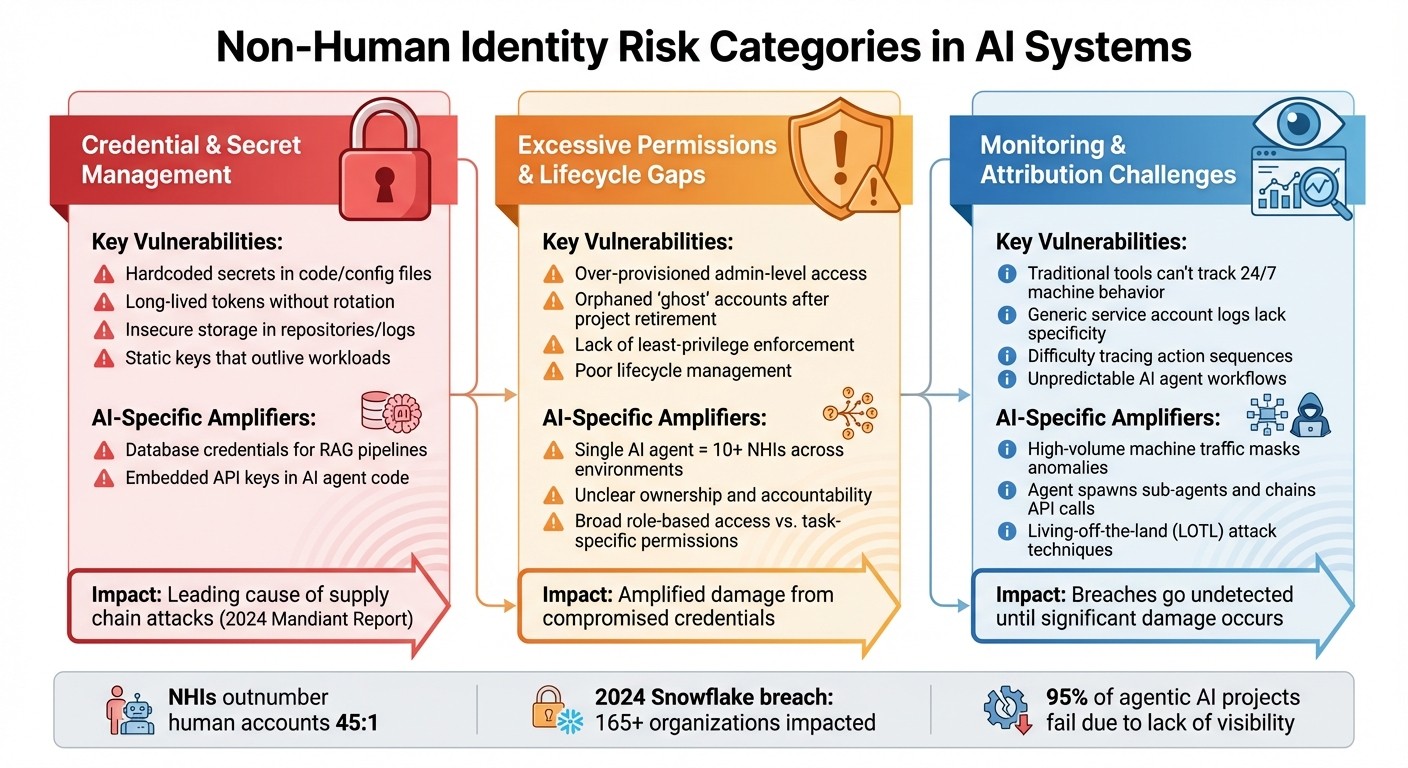

Non-human identities (NHIs), such as API keys, service accounts, and AI agent credentials, are essential for automation and AI systems but carry serious risks. They now outnumber human accounts by as much as 45:1, and that growth has exposed critical security gaps. Common issues include hardcoded credentials, excessive permissions, and poor lifecycle management, all of which make NHIs a prime target for attackers.

Key takeaways:

NHIs are often over-permissioned, poorly monitored, and lack ownership.

In breaches like the 2024 Snowflake incident, attackers exploited weak NHI controls, affecting more than 165 organizations.

Traditional IAM tools aren't equipped to handle the scale or complexity of NHIs.

Solutions include least privilege access, short-lived credentials, and real-time monitoring.

Organizations must adopt structured frameworks and tools to manage NHI risks, ensuring accountability and compliance while reducing vulnerabilities.

Agentic AI and Non-Human Identity Risks | Mike Towers | NHI Summit 2025

Main Risk Categories for Non-Human Identities

[Figure: Non-Human Identity Risk Categories and Vulnerabilities in AI Systems]

Non-Human Identities (NHIs) introduce a range of risks that can be grouped into three main categories. These risks highlight vulnerabilities that traditional security tools aren't equipped to address, pointing to the need for new approaches to manage them effectively.

Credential and Secret Management Problems

Hardcoding credentials is a widespread issue in AI systems. Developers often embed API keys, database passwords, and service tokens directly into code, configuration files, or CI/CD pipelines. These static secrets, if stored in repositories, logs, or container images, are easy targets for attackers. According to the 2024 Mandiant Cloud Security Report, stolen API keys are a leading cause of supply chain attacks.

The problem worsens with long-lived tokens. Unlike humans, NHIs can't perform interactive logins or use multi-factor authentication, so organizations rely on static keys that rarely get updated. These credentials can remain active long after the AI agent or workload they belong to is retired, creating opportunities for exploitation through source code leaks, compromised build systems, or poorly managed secret storage.

AI systems also bring specific challenges. For instance, Retrieval-Augmented Generation (RAG) pipelines require database credentials to access knowledge bases. If these credentials aren't well-protected, they open up new attack surfaces.
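As a minimal sketch of the alternative to hardcoding, the snippet below reads a credential from the environment at runtime and fails fast if it is absent. It assumes a secrets manager or orchestrator injects the value; the function name `load_secret` and the variable name `RAG_DB_PASSWORD` are hypothetical and not part of any specific product.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not present in the environment."""


def load_secret(name: str) -> str:
    """Return the secret stored in the environment variable `name`.

    Assumption: a secrets manager or orchestrator injects the value at
    runtime, so nothing sensitive is committed to the repository.
    """
    value = os.environ.get(name)
    if not value:
        # Fail fast instead of silently falling back to a default credential.
        raise MissingSecretError(f"secret {name!r} is not set")
    return value
```

A RAG pipeline could then call `load_secret("RAG_DB_PASSWORD")` when it opens its database connection, keeping the value out of code, configuration files, and container images.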

In addition to mismanaged secrets, excessive permissions amplify the risks.

Excessive Permissions and Lifecycle Management Gaps

NHIs are often granted far more access than they need. Service accounts and AI agents frequently receive admin-level permissions or broad, role-based access instead of being restricted to the minimum necessary for their tasks. This overprovisioning increases the potential damage if credentials are compromised.

Orphaned accounts are another weak spot. When projects end or agents are retired, their credentials often remain active, creating "ghost" identities that attackers can exploit.

The scale of these issues makes governance a daunting task. A single AI agent might involve 10 or more NHIs across various environments, making it hard to track which identity is responsible for which function. This lack of clarity often leaves questions about ownership, purpose, and appropriate access unanswered.

Monitoring and Attribution Challenges

Traditional security tools are designed to monitor human behavior - things like work hours, interactive logins, and predictable workflows. But AI agents operate around the clock, generate huge volumes of machine traffic, and behave unpredictably, making conventional monitoring methods ineffective.

Attribution is another major hurdle in environments with complex AI operations. When agents spawn sub-agents, invoke tools, or chain API calls, logs often only show generic service accounts or tokens. This makes it difficult for security teams to answer critical questions like: Who initiated a database query? What sequence of actions led to an API call? Who approved access to sensitive data?

Attackers exploit these blind spots with living-off-the-land (LOTL) techniques, using legitimate NHIs to blend in with regular machine traffic. They can use valid credentials to steal data, create backdoor identities, and move laterally within systems - all while appearing as normal automated activity. Without real-time monitoring and detailed audit trails, breaches can go undetected until the damage is already done.

| Risk Category | Key Vulnerabilities | AI-Specific Amplifiers |
|---|---|---|
| Credential Management | Hardcoded secrets, long-lived tokens, insecure storage | Database credentials for RAG pipelines |
| Excessive Permissions | Overprovisioning, orphaned accounts, lifecycle gaps | Admin privileges and multiple NHIs per agent |
| Monitoring Challenges | Attribution difficulties, unpredictable behavior | 24/7 operations, LOTL hijacking |

Critical Risk Scenarios in AI Agent Lifecycles

This section dives into the vulnerabilities that can emerge at each stage of an AI agent's lifecycle: design, execution, and decommissioning. Each phase introduces unique risks that can be exploited if not properly managed.

Provisioning Risks During Agent Design

During the design phase, several risks can creep in, often due to shortcuts or oversights. One common issue is embedding default or hardcoded API keys directly into code, templates, or configurations instead of using a secure secrets manager. If these credentials end up in repositories or documentation, they become easy targets for attackers.

Shared service accounts add another layer of complexity. When multiple agents use the same credentials, it becomes nearly impossible to attribute specific actions to a particular agent. For example, imagine a customer-support bot integrated with a CRM system using a shared account that has permissions to "export all data." If a sensitive customer list is exported, it can be difficult to pinpoint which agent or workflow was responsible, creating both regulatory and incident-response headaches.

Broad third-party integrations can also expand the attack surface. Providing agents with organization-wide access - rather than limiting permissions to specific use cases - magnifies the potential damage if an agent or its connector is compromised. For instance, a data-processing bot might use hardcoded cloud access keys copied from internal documentation. If those keys remain active after a contractor leaves, they could later be exploited to spin up thousands of compute instances, leading to an unexpected and massive cloud bill.

These vulnerabilities during the design phase often set the stage for exploitation when agents are deployed.

Operational Risks During Agent Execution

Once agents are active, the risks become more immediate. Overprivileged credentials can be exploited for lateral movement across systems. Attackers might manipulate an agent through methods like prompt injection or by compromising its configurations. These agents, often connected to systems like CI/CD pipelines, cloud IAM, or ticketing platforms, can then be used to create unauthorized identities, alter roles, or deploy malicious workloads. Since agents operate autonomously, they can carry out bulk actions at machine speed - think mass record updates, bulk file deletions, or large-scale email campaigns.

The 2024 Snowflake incident exemplified this: attackers used stolen credentials that lacked MFA to gain access to customer databases at more than 165 organizations.

Traditional monitoring tools often struggle to keep up with agent behavior. Non-human identities generate high volumes of activity around the clock, making it difficult to distinguish between normal operations and anomalies, such as unexpected data exports or unusual access times. Logs typically only capture the shared identity of the agent, without identifying the specific workflow or session involved, complicating incident response efforts.

Platforms like Prefactor aim to address these challenges by offering real-time visibility and audit trails that tie every action to a specific agent identity. This kind of attribution is critical for meeting regulatory requirements, notifying stakeholders of breaches, and ensuring accountability under U.S. regulations.

While operational risks can be mitigated through monitoring and governance, the decommissioning phase presents its own set of challenges.

Decommissioning and Shadow Identity Risks

When projects wrap up, teams often focus on disabling user interfaces or orchestration scripts but overlook the cleanup of associated credentials. API keys, OAuth tokens, SSH keys, and cloud service accounts are often left behind, remaining valid indefinitely. These "orphaned" credentials frequently retain broad permissions, such as access to production data or CI/CD pipelines, creating potential backdoors for attackers.

Shadow agents - experimental or vendor-managed bots operating outside formal registration or inventory processes - add another layer of risk. Even after a project ends, these integrations (like webhooks, API clients, or SaaS marketplace apps) may continue running unnoticed. In sprawling SaaS environments, this identity sprawl can grow unchecked because traditional offboarding processes, which are typically designed for human employees, fail to account for machine credentials.

To address these risks, organizations should implement a formal offboarding checklist for every agent. This checklist should include steps like revoking or rotating associated keys and tokens, disabling or deleting service accounts, removing roles and group memberships, and unregistering third-party integrations. Importantly, this process should be triggered not just when a system is shut down but also when a project ends, ownership changes, or a vendor relationship concludes. Maintaining an up-to-date inventory that maps each non-human identity to its owner, purpose, environment, and data access is essential to keeping these risks in check.
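The checklist above could be tracked in code along these lines. This is a sketch only: the step names mirror the steps listed in the paragraph, while `OffboardingRecord` and its fields are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field

# Steps taken from the offboarding checklist described above.
OFFBOARDING_STEPS = (
    "revoke_or_rotate_keys_and_tokens",
    "disable_or_delete_service_accounts",
    "remove_roles_and_group_memberships",
    "unregister_third_party_integrations",
)


@dataclass
class OffboardingRecord:
    """Tracks checklist progress for one agent (hypothetical structure)."""

    agent_id: str
    trigger: str  # e.g. "project_ended", "ownership_changed", "vendor_ended"
    completed: set = field(default_factory=set)

    def complete(self, step: str) -> None:
        if step not in OFFBOARDING_STEPS:
            raise ValueError(f"unknown step: {step}")
        self.completed.add(step)

    def outstanding(self) -> list:
        """Steps still open, in checklist order."""
        return [s for s in OFFBOARDING_STEPS if s not in self.completed]

    def is_done(self) -> bool:
        return not self.outstanding()
```

Recording the trigger alongside the steps makes it easier to confirm that offboarding also ran for ownership changes and ended vendor relationships, not only for system shutdowns.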

How to Build a Risk Assessment Framework for NHIs

After identifying lifecycle risks, the next step is creating a structured framework to pinpoint, evaluate, and prioritize those risks. Without a clear system, managing the sheer number of non-human identities (NHIs) in today’s cloud environments becomes overwhelming. Manual processes simply can’t keep up with tens of thousands of service accounts, API keys, and AI agents.

Creating an NHI Inventory

The backbone of any risk framework is knowing exactly what identities you’re dealing with. Start by gathering NHIs from various sources like cloud IAM systems (AWS, Azure, GCP), CI/CD pipelines, Kubernetes clusters, secret managers, API gateways, and SaaS integrations. For each identity, document key details such as type, owner, environment, associated systems, and lifecycle state.

Use a combination of top-down and bottom-up discovery methods. For instance, connect directly to cloud IAM APIs to list service principals and roles. At the same time, scan infrastructure-as-code files (like Terraform configurations, Helm charts, or GitHub Actions workflows) for embedded credentials. Secret scanners can be used on code repositories to detect hardcoded API keys. Tools like Prefactor can automatically register AI agents and their credentials, ensuring clear accountability.
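To illustrate the bottom-up side of discovery, here is a deliberately small secret-scanning sketch. The two rules are assumptions for illustration: one matches the widely documented `AKIA` prefix format of AWS access key IDs, the other is a rough heuristic for quoted values assigned to names containing words like `password` or `token`. Dedicated scanners use far larger rule sets and entropy checks.

```python
import re

# Illustrative rules only; real scanners ship many more patterns.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "generic_assignment": re.compile(
        r"(?i)(api[_-]?key|secret|token|password)\w*\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_text(text: str) -> list:
    """Return (line_number, rule_name) pairs for every suspicious line."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings
```

Running `scan_text` over Terraform files, Helm charts, or workflow definitions yields candidate findings that still need human review, since heuristics like these produce false positives.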

The inventory should remain dynamic and up-to-date. Automate discovery and reconciliation processes to flag any identity lacking a clear owner or business context as a "shadow" identity. These shadow identities should be escalated for immediate review.
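A sketch of what an inventory record and the shadow-identity check might look like follows. The field names are assumptions derived from the attributes mentioned above (type, owner, environment, lifecycle state); `NHIRecord` and `find_shadow_identities` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NHIRecord:
    """One inventory entry (hypothetical schema)."""

    identity_id: str
    kind: str             # e.g. "service_account", "api_key", "ai_agent"
    environment: str      # e.g. "development", "staging", "production"
    lifecycle_state: str  # e.g. "active", "retired"
    owner: Optional[str] = None
    purpose: Optional[str] = None


def find_shadow_identities(inventory: list) -> list:
    """Return records lacking an owner or a stated business purpose."""
    return [r for r in inventory if not r.owner or not r.purpose]
```

Records returned by `find_shadow_identities` are the ones to escalate for review.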

Once you have a comprehensive inventory, the next step is assigning a risk score to each NHI.

Evaluating Risk Likelihood and Impact

A NIST-style matrix is a practical way to assess risk. Each NHI is rated on two dimensions: likelihood (1–5, from Rare to Almost Certain) and impact (1–5, from Negligible to Catastrophic). These scores are then mapped onto a grid to classify risks as Low, Moderate, High, or Critical.
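The grid lookup can be sketched as a product of the two ratings with cut-off bands. The thresholds below are assumptions chosen for illustration; the article does not specify where the band boundaries fall, and each organization should set its own.

```python
def classify_risk(likelihood: int, impact: int) -> str:
    """Map 1-5 likelihood and impact ratings onto a risk band.

    Assumption: bands are defined on the product likelihood * impact.
    The cut-offs (15, 10, 5) are illustrative, not taken from a standard.
    """
    for name, value in (("likelihood", likelihood), ("impact", impact)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5")
    score = likelihood * impact
    if score >= 15:
        return "Critical"
    if score >= 10:
        return "High"
    if score >= 5:
        return "Moderate"
    return "Low"
```

Under these assumed cut-offs, an NHI rated 3 for likelihood and 4 for impact scores 12 and is classified as High.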

For likelihood, evaluate measurable factors such as: Are the credentials hardcoded, or are they stored securely in a vault? Are the tokens short-lived or long-lived? Is the NHI exposed to the internet or limited to internal use? Does it have broad permissions that span accounts or regions? Are there logging and anomaly detection mechanisms in place? For AI agents specifically, the risk of compromise increases if they can autonomously chain tools, modify infrastructure, or access sensitive datasets without safeguards.

When assessing impact, consider the consequences specific to U.S. industries. For example, in healthcare, a breach could result in HIPAA violations, while in finance, it might lead to SEC scrutiny. In retail, accessing payment data could trigger PCI DSS penalties. Operational costs also play a role - quantify potential downtime in dollars per hour and evaluate cascading risks, such as whether the NHI can create new identities, modify IAM policies, or alter AI agent behaviors.

Assign each NHI a risk score and establish remediation timelines. For example:

Critical risks (high likelihood and high impact) should be resolved within seven days with executive oversight.

High risks should be addressed within 30 days.

Moderate and Low risks can be managed during routine hardening cycles.
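The timelines above translate directly into a due-date calculation. In this sketch, `remediation_due` is a hypothetical helper; it returns `None` for the lower tiers, which the text assigns to routine hardening cycles rather than a fixed deadline.

```python
from datetime import date, timedelta
from typing import Optional

# Deadlines from the list above; lower tiers have no fixed deadline.
REMEDIATION_DAYS = {"Critical": 7, "High": 30}


def remediation_due(rating: str, found_on: date) -> Optional[date]:
    """Return the remediation due date, or None if handled in routine cycles."""
    days = REMEDIATION_DAYS.get(rating)
    if days is None:
        return None
    return found_on + timedelta(days=days)
```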

With risks quantified, the next step is implementing Zero Trust principles to monitor and control every NHI.

Applying Zero Trust Principles to NHIs

Zero Trust for non-human identities means verifying every access request, even at machine scale. Start by applying the least privilege principle: grant each NHI only the permissions it absolutely needs, and review access regularly. For AI agents, this involves providing scoped, context-aware permissions governed by human intent and defined in code.

Replace hardcoded secrets with secure alternatives like managed identities, hardware-backed keys, and secrets vaults. Use short-lived tokens and enforce microsegmentation with policy-based access controls to limit machine-to-machine communication.
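To make the idea of a short-lived, scoped token concrete, the sketch below signs a small set of claims with an HMAC and rejects the token once it expires or when the scope does not match. It uses only the Python standard library and is an illustration, not a recommended token format: in practice a managed identity service or a standard such as OAuth 2.0 would issue the tokens. The function names and claim names are assumptions.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def issue_token(key: bytes, subject: str, scope: str, ttl_seconds: int,
                now: Optional[float] = None) -> str:
    """Create a signed token that expires ttl_seconds after issuance."""
    issued = time.time() if now is None else now
    claims = {"sub": subject, "scope": scope, "exp": issued + ttl_seconds}
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return f"{payload.decode()}.{signature}"


def verify_token(key: bytes, token: str, required_scope: str,
                 now: Optional[float] = None) -> bool:
    """Accept the token only if signature, scope, and expiry all check out."""
    current = time.time() if now is None else now
    try:
        payload, signature = token.rsplit(".", 1)
    except ValueError:
        return False
    expected = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking signature bytes via timing.
    if not hmac.compare_digest(signature, expected):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload))
    return claims["scope"] == required_scope and current < claims["exp"]
```

A token issued with a 300-second lifetime for the scope `kb:read` is useless to an attacker a few minutes later and never grants write access, which is the property static keys lack.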

Continuous verification is essential. Deploy behavioral analytics and anomaly detection tailored to machine activity. Watch for unusual patterns, such as unexpected access times, irregular data exports, or privilege escalation attempts. Every NHI must be traceable to an accountable owner and a clear business purpose, with centralized logs that link actions to identities. For production AI agents, platforms like Prefactor offer real-time visibility, audit trails, and compliance controls. These tools help enforce Zero Trust at scale and address accountability gaps, a common reason why so many AI projects fail.

Finally, integrate NHI risks into your enterprise risk register and align controls with frameworks like the NIST Cybersecurity Framework and NIST SP 800-207. This ensures that NHIs are treated as integral components of your security strategy, not as secondary concerns.

Best Practices for Managing NHIs in AI Systems

After establishing a risk framework, the next step is putting practical controls in place to manage lifecycle risks effectively. These best practices focus on three key areas: managing governance from creation to retirement, implementing technical safeguards to protect credentials, and maintaining continuous monitoring to detect issues early. Together, these measures provide actionable steps to manage NHIs throughout their lifecycle.

End-to-End Lifecycle Governance

Every NHI (Non-Human Identity) should have a clearly assigned owner, a specific purpose, and a well-defined lifecycle. Begin with policy-driven provisioning that requires teams to answer essential questions before creating any NHI: Who is responsible for this identity? What resources will it access? Is it tied to development, staging, or production environments? How long will it be needed? These requirements should be built into your CI/CD pipelines and infrastructure-as-code templates to ensure no service account or API key can be created without meeting these standards.
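A provisioning gate of this kind might be sketched as a validation function that a CI/CD step calls before any identity is created. The request fields (`owner`, `resources`, `environment`, `expires_on`) are hypothetical names mapped to the four questions above.

```python
from datetime import date

ALLOWED_ENVIRONMENTS = {"development", "staging", "production"}


def provisioning_violations(request: dict, today: date) -> list:
    """Return the policy violations for an NHI request (empty list = approved)."""
    problems = []
    if not request.get("owner"):
        problems.append("owner is required")
    if not request.get("resources"):
        problems.append("at least one resource must be listed")
    if request.get("environment") not in ALLOWED_ENVIRONMENTS:
        problems.append("environment must be development, staging, or production")
    expires_on = request.get("expires_on")
    if not isinstance(expires_on, date) or expires_on <= today:
        problems.append("expires_on must be a future date")
    return problems
```

A pipeline step could fail the build whenever the returned list is non-empty, so no service account or API key is created without an owner, a scope, an environment, and an end date.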

Permissions should be as specific and limited as possible. For instance, grant read-only access to a single S3 bucket rather than broader permissions. If AI agents need to use external APIs, configure OAuth scopes with strict limitations and time-bound policies to reduce risks tied to compromised credentials.
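The S3 example can be expressed as an IAM-style policy document, together with a small check that flags wildcard grants. The policy layout follows the AWS JSON policy structure (`Version`, `Statement`, `Effect`, `Action`, `Resource`); the bucket name and the helper `overly_broad_statements` are hypothetical, and the check is a rough heuristic rather than a full policy analyzer.

```python
def _as_list(value):
    """IAM allows a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def overly_broad_statements(policy: dict) -> list:
    """Return Allow statements that use a wildcard action or resource."""
    flagged = []
    for statement in _as_list(policy.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        actions = _as_list(statement.get("Action", []))
        resources = _as_list(statement.get("Resource", []))
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        if wildcard_action or "*" in resources:
            flagged.append(statement)
    return flagged


# Read-only access to objects in a single, hypothetical bucket.
READ_ONLY_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": "arn:aws:s3:::example-reports-bucket/*",
        }
    ],
}
```

Here `overly_broad_statements(READ_ONLY_POLICY)` returns an empty list, while a statement granting `"Action": "s3:*"` on `"Resource": "*"` would be flagged for review.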

Conduct periodic access reviews - typically every quarter - to identify over-permissioned or orphaned identities. During these reviews, owners should confirm that each NHI is still necessary, properly scoped, and compliant with internal policies. Pay extra attention to high-risk NHIs with access to sensitive systems and data, such as production systems, payment platforms, or regulated data like protected health information (PHI) and cardholder data covered by PCI DSS.

When projects or agents conclude, revoke credentials immediately. NHIs that show no activity for 30–90 days should automatically expire after notifying their owners.
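The inactivity rule can be sketched as a comparison between each identity's last-use timestamp and a cutoff. The mapping of identity IDs to timestamps is assumed to come from access logs; `stale_identities` is a hypothetical helper, and owner notification is left out of the sketch.

```python
from datetime import datetime, timedelta, timezone


def stale_identities(last_used: dict, now: datetime, max_idle_days: int = 90) -> list:
    """Return IDs of identities unused for longer than max_idle_days.

    `last_used` maps identity ID -> timezone-aware datetime of last activity
    (assumed to be derived from access logs).
    """
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(i for i, ts in last_used.items() if ts < cutoff)
```

An expiry job would notify the owner of each returned identity first and revoke the credential only afterwards, matching the order described above.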

Technical Safeguards for NHIs

Building on governance, technical safeguards are crucial for protecting credentials at every stage. Poor credential management is a major vulnerability for NHIs. Start by removing hardcoded secrets from source code, configuration files, and CI/CD scripts. Instead, use secure secret managers - such as cloud-native stores or HSM-backed services - that enforce access controls and maintain audit logs.

Replace long-lived API keys with short-lived, scoped tokens that are issued per task or session and expire automatically. Integrate automated secret rotation into your CI/CD processes so agents can pick up updated credentials without interruption.

Workload identity mechanisms offer another layer of protection. These mechanisms link identities to cryptographically verifiable workloads or devices, reducing the risk of credential theft. Instead of static keys, tie NHIs to specific pods, virtual machines, or runtime environments, and refresh them regularly. Always separate environments like development, staging, and production, and avoid sharing NHI credentials across them. Additionally, use network-level controls, such as VPCs or service mesh policies, to limit damage if an NHI is compromised.

Continuous Monitoring and Audit Trails

Effective monitoring of NHIs requires identity-aware telemetry that goes beyond standard logging. Track key events such as authentication details (who authenticated, from where, and with which credential), authorization decisions (what resource was accessed, what action was taken, and whether it was allowed or denied), and API call patterns or data-access logs for sensitive resources. By establishing baselines for normal NHI activity, deviations - like a sudden spike in database queries at 2:00 AM - can trigger alerts for investigation.
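One simple way to express a baseline is a z-score over recent activity counts. The sketch below is an assumption about how such a check could work, not a description of any particular product; production UEBA systems use richer models that account for seasonality and multiple signals.

```python
from statistics import mean, stdev


def is_anomalous(history: list, observed: float, threshold: float = 3.0) -> bool:
    """Flag `observed` if it deviates from the baseline by more than
    `threshold` sample standard deviations.

    `history` holds recent per-interval counts (e.g. queries per hour).
    """
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return observed != baseline
    return abs(observed - baseline) / spread > threshold
```

With an hourly baseline of roughly 100 database queries, a 2:00 AM reading of 900 would be flagged, while a reading of 104 would not.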

Audit trails should be comprehensive, tamper-evident, and centered on identity. Each event must include the specific NHI (e.g., service account, token ID, or agent ID), a standardized timestamp (e.g., ISO 8601 in UTC, such as 2025-03-15T18:32:10Z), the action performed, the resource involved, the origin of the request (including IP address and region), and the outcome. Centralize logs in an immutable format - using write-once storage or log hashing - and ensure they are accessible to security and compliance teams under strict access controls.
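Log hashing can be illustrated with a hash chain, in which every entry stores the hash of the previous one so that any later modification becomes detectable. This is a minimal sketch under stated assumptions: the event fields follow the list above, timestamps are ISO 8601 UTC strings, and `append_event` and `verify_chain` are hypothetical helpers rather than part of any logging product.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(event: dict, prev_hash: str) -> str:
    body = {"event": event, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_event(log: list, event: dict) -> dict:
    """Append an audit event linked to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev_hash": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash; return False if any entry was altered or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["event"], entry["prev_hash"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this detects tampering but does not prevent it, so it complements write-once storage rather than replacing it.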

For U.S. organizations, audit trails must align with requirements such as the SOC 2 CC6 and CC7 criteria, PCI DSS logging requirements, and state-level breach notification laws. Tools like Prefactor can generate detailed audit trails for AI agents, bridging the gap between agent activities and traditional audit frameworks. This is particularly useful when agents operate across multiple NHIs. Integrating these logs with SIEM and UEBA solutions helps correlate NHI anomalies with broader system events. Retain logs for one to seven years, depending on regulatory and organizational policies. This level of oversight keeps every NHI action traceable and reinforces accountability at every step.

Conclusion

The rise of non-human identities (NHIs) is staggering, with these entities now outnumbering human users by as much as 45 to 1. From service accounts and API keys to workload identities and AI agents, these machine credentials operate independently, creating vulnerabilities that traditional Identity and Access Management (IAM) systems simply cannot address. A stark example of this risk was the 2024 Snowflake breach, where attackers exploited stolen machine credentials - credentials lacking multi-factor authentication (MFA) - to infiltrate databases across more than 165 organizations. The breach went undetected for weeks, highlighting serious gaps in accountability.

As organizations deploy AI agents at scale, managing NHI risks becomes non-negotiable. Structured frameworks are essential for addressing these challenges. Controls such as NHI inventories, least-privilege policies, Zero Trust architectures, and lifecycle governance can help prevent credential sprawl, over-permissioned identities, and monitoring blind spots. Without these safeguards, organizations face heightened risks of breaches and failed projects. By some estimates, as many as 95% of agentic AI projects fail, often because teams lack the visibility and controls needed to move agents from proof-of-concept to production.

To mitigate these risks, organizations must treat machine identities with the same seriousness as human ones. This means enforcing policy-as-code, using short-lived credentials with automated rotation, and maintaining detailed audit trails that log every NHI action - capturing who (or what) performed an action, when it happened, and why. These steps not only reduce breach risks but also ensure scalability and compliance, especially for regulated industries.

For enterprises advancing AI agents into production, specialized platforms like Prefactor offer the governance infrastructure needed to bridge accountability gaps. Prefactor provides real-time visibility, robust audit trails, and compliance controls that translate technical actions into business terms. This enables organizations to securely scale AI deployments while maintaining operational oversight. By combining structured governance frameworks with purpose-built tools, businesses can leverage AI agents effectively without exposing themselves to the risks posed by thousands of unmonitored machine identities.

The future of AI deployment hinges on strong NHI governance today. Investing in proactive machine identity management is essential to scaling AI safely, meeting compliance requirements, and protecting against breaches.

FAQs

What are the key risks of non-human identities in AI systems?

The risks associated with non-human identities in AI systems are significant and multifaceted. One major concern is identity spoofing, where attackers pose as AI agents to deceive or manipulate systems. There's also the threat of unauthorized access, which could expose sensitive information and compromise security. Another pressing issue is the loss of control over autonomous agents, potentially leading to unpredictable or even harmful outcomes.

Moreover, when AI systems lack transparency, it opens the door to security vulnerabilities, data breaches, and even intentional misuse. These challenges underscore the need for strong governance and constant monitoring to keep AI systems secure and ensure they operate within established guidelines.

What steps can organizations take to manage non-human identities in AI systems effectively?

Organizations can streamline the management of non-human identities in AI systems by using a comprehensive solution like Prefactor. This tool provides real-time visibility, detailed audit trails, and policy-based access controls, ensuring security and compliance throughout the entire identity lifecycle.

With automated identity provisioning, scalable policy enforcement, and continuous monitoring of agent activities, businesses can maintain control while minimizing risks. Prefactor also integrates seamlessly with existing identity systems and supports deployment through CI/CD pipelines, making management easier and enabling robust governance and scalability for enterprise needs.

Why can’t traditional IAM tools effectively manage AI system identities?

Traditional Identity and Access Management (IAM) tools were designed with human users in mind, which is why they struggle to handle AI system identities effectively. These tools rely on methods like multi-factor authentication (MFA), CAPTCHAs, and static role assignments - approaches that simply don’t match the fast-moving, dynamic, and autonomous nature of AI agents.

AI systems need identity management solutions that are secure, adaptable to rapid changes, and capable of operating at the speed and scale of machines. They also require tools that can enforce policies, provide real-time visibility, and maintain control seamlessly. Unfortunately, traditional IAM tools lack these critical features, making them ill-suited for managing the complex demands of AI-driven environments.